Bob Shaw’s 1966 short story “Light of Other Days” and 1972 novel “Other Days, Other Eyes” are built around the idea of “slow glass,” a substance through which light travels very slowly. The material was developed as a strong, lightweight safety glass for automotive and aerospace applications, but drivers of cars with the new glass installed suffer a higher rate of near-misses and collisions than usual, and the first plane with the glass in its cockpit windows flies straight into the ground on its first landing attempt. Only then is it discovered that the new glass delays the light passing through it by a fraction of a second, causing unfortunate lags in drivers’ and pilots’ perceptions and responses.

Digital cameras collect images in frame buffers before passing them on, and digital EVFs and monitors – especially those that resample images from their native resolution to a different size for display – may also process images by the frame, not by the pixel or scanline. In doing so, the images are delayed; the monitoring chain is a form of “slow glass”, and camera operators are reacting not to what’s happening now in front of the lens, but to what happened some time ago as shown on the monitor.

How bad is it? I have sometimes noticed a bit of lag on the LCD monitor or in the EVF of various cameras, but I hadn’t really tried to measure it before. This past week, with the kind assistance of local rental house Videofax, I decided to find out.

The Experiment

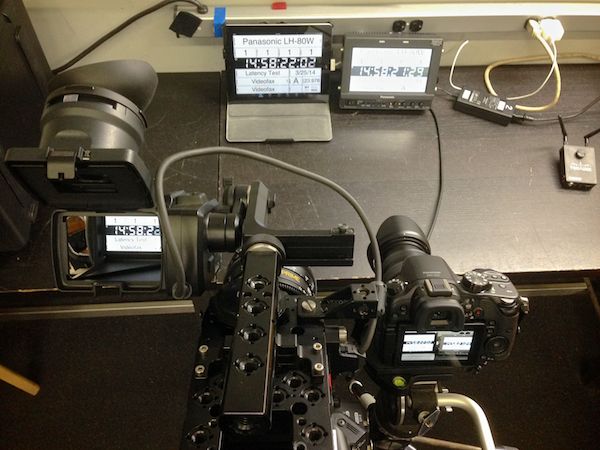

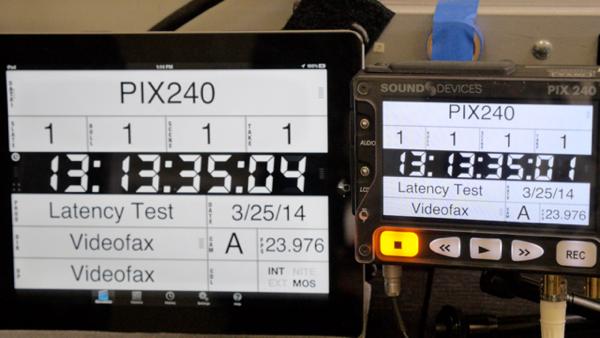

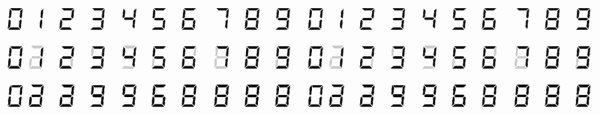

My plan was simple: I set up my iPad running Movie Slate as a timecode display, which I shot with Videofax’s Sony F55. The F55 fed a variety of field monitors and monitor/recorders, and I shot both the iPad and the monitor under test side-by-side with my GH3. Then, all I’d need to do was look at the GH3 footage, and compare the timecode numbers on the slate to the numbers on the monitor’s image of the slate; that would give me the total lens-to-LCD latency as a frame difference. Simple, huh?

Well, maybe a bit too simple. The F55 wasn’t genlocked to the iPad, so there was no guarantee that it would shoot “whole” frames of a given timecode: the timecode might tick over in the middle of a frame, so I’d get a partial exposure of one number, and a partial exposure of the next number superimposed atop it. Indeed, this happened during a few runs, and the nature of a 7-segment font is that overlapped numbers are often ambiguous, as this graphic illustrates:

These overlapped digits can make it seem like a monitor is freezing and dropping frames, and displaying frames out of order: rather distressing.
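The ambiguity can be sketched in a few lines of Python. This is only an illustration under assumptions: it uses the conventional seven-segment encoding (segments labeled `a` through `g`), which may not match Movie Slate’s actual font, and it treats any partially exposed segment as fully lit. The function name `overlap_reads_as` is made up for this example.

```python
# Conventional seven-segment encoding (an assumption; the slate app's
# font may draw some digits differently).
SEGMENTS = {
    "0": "abcdef",
    "1": "bc",
    "2": "abdeg",
    "3": "abcdg",
    "4": "bcfg",
    "5": "acdfg",
    "6": "acdefg",
    "7": "abc",
    "8": "abcdefg",
    "9": "abcdfg",
}


def overlap_reads_as(first, second):
    """Return the digits whose pattern equals two digits superimposed.

    Simplification: a segment lit in either digit counts as lit,
    ignoring the dimmer look of a partial exposure.
    """
    lit = set(SEGMENTS[first]) | set(SEGMENTS[second])
    return [digit for digit, segs in SEGMENTS.items() if set(segs) == lit]


if __name__ == "__main__":
    # Walk through every consecutive pair of digits, including 9 -> 0.
    for n in range(10):
        a, b = str(n), str((n + 1) % 10)
        looks_like = overlap_reads_as(a, b) or ["no valid digit"]
        print(f"{a} over {b} reads as: {', '.join(looks_like)}")
```

With this encoding, a “3” blended with a “4” lights exactly the segments of a “9”, so a frame caught mid-transition can appear to jump ahead and then back again, which looks a lot like frames arriving out of order.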

The same lack of genlock applied to my GH3, of course, but it was running at 1080p60 with a 1/125 or 1/250 shutter; its finer time-slices and shorter shutter times made it easier to find whole frames in the 29.97 and 23.976 fps video being shot by the F55 (in retrospect I should have set the F55 to an equally brief shutter time, and saved myself some grief).

Instead of simply reading the numbers off a single frame, I wound up having to single-step through many frames of captured video, looking for unambiguous transition points, like the changing of the ten-frame digit from “1” to “2”. Were I to repeat this experiment, I could save myself a lot of work by using a common sync for the timecode slate and both cameras (which means using a real camera with genlock for the recording camera, not a GH3!), using short shutter times on both cameras, and/or by using an unambiguous time reference: one which won’t overlap or repeat digits, or one which uses a moving scale or pointer. I’ve used bigstopwatch for this in the past, and would do so again.
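For anyone repeating the test, the single-stepping approach can be reduced to a small calculation once the digits have been read off each captured frame. The sketch below is not the procedure used for these tests, just one way to do the arithmetic. It assumes that “1080p60” on the recording camera means 60000/1001 fps, that the window contains exactly one transition, and that the readings (invented here) were transcribed by hand; all function names are hypothetical.

```python
from fractions import Fraction


def first_change(readings):
    """Index of the first reading that differs from its predecessor."""
    for i in range(1, len(readings)):
        if readings[i] != readings[i - 1]:
            return i
    return None


def latency_in_source_frames(slate, monitor, capture_fps, source_fps):
    """Delay between a digit changing on the slate and on the monitor.

    slate and monitor hold one reading per frame of the recording
    camera; the result is expressed in frames of the camera under test.
    """
    slate_idx = first_change(slate)
    if slate_idx is None:
        raise ValueError("no transition found on the slate")
    new_value = slate[slate_idx]
    if new_value not in monitor:
        raise ValueError("monitor never shows the new value")
    monitor_idx = monitor.index(new_value)
    delay_capture_frames = monitor_idx - slate_idx
    return Fraction(delay_capture_frames) * Fraction(source_fps) / Fraction(capture_fps)


if __name__ == "__main__":
    # Hypothetical readings of the ten-frame digit, one per captured frame.
    slate = [1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2]
    monitor = [1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2]
    latency = latency_in_source_frames(
        slate,
        monitor,
        capture_fps=Fraction(60000, 1001),  # assumed rate of the recording camera
        source_fps=Fraction(30000, 1001),   # 29.97 fps camera under test
    )
    print(f"latency: {float(latency)} source frames")
```

Because the recording camera runs at twice the 29.97 fps rate, each of its frames is worth half a source frame, which is why half-frame latencies can be resolved at all.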

If you want to test your own cameras and monitors, learn from my mistakes, and do it right the first time!

I ran the tests at both 29.97 fps and at 23.98 fps. The F55 outputs progressive as segmented-frame video (in essence, progressive frames split across two fields), so the 29.97p also stood in for 29.97i. As we’ll see, some monitors appear to process on a field basis, not a frame basis, and that let them update their displays half a frame earlier than some of their peers.

I ran all monitors hardwired via SDI, except for the Zacuto Z-Finder EVF which used HDMI, and I also ran three wireless tests: I connected the Panasonic BT-LH80 using the Teradek Bolt and Bolt 2000 uncompressed wireless links, and I used a Teradek Cube to send h.264 compressed video to an iPhone.

I also ran many of the tests with and without the monitor’s focus assist engaged; if you’re using the focus assist function as a 1st AC, it’s useful to know if doing so adds any additional delay.

The Results

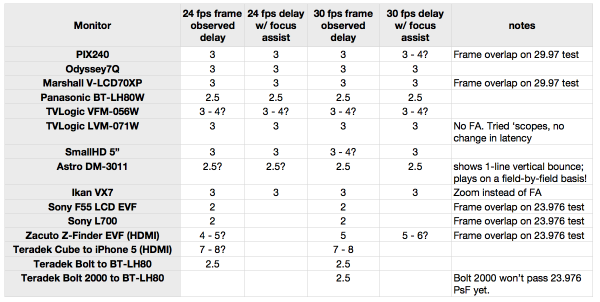

Where I’m uncertain of the results, I’ve given the range of results and/or a question mark; these are uncertainties that would be cleared up with the protocol changes I suggested above (an unambiguous test image; genlocking all the devices together).

In short, the soonest you’ll ever see an image shot with an F55 will be two frames after it happens; that’s with Sony’s own LCD EVF for the F55 or the L700 monitor.

The Panasonic and Astro monitors appear to lag an additional half a frame – one field of interlaced video, or of a PsF feed – behind, and the Astro monitor’s image bounces up and down one scanline on every frame; it appears to be updating its display on a field-by-field basis.

Most of the monitors display an image three frames in the past.

Turning focus assist on doesn’t appear to cause any further delays in any case (in the PIX240 and the Zacuto tests, I believe the possible increase I observed with focus assist is due to experimental ambiguity, as discussed above).

The Zacuto was an outlier, at 4-5 frames of latency. It’s the monitor, not the connection type; I confirmed this by hooking a common HDMI feed into the Odyssey7Q and the Zacuto; the Zacuto lagged the 7Q by two frames, just as it did when the 7Q was fed SDI instead.

“It was the best of times, it was the worst of times…” the iPad, two Sony monitors, and the Zacuto all at once!

The Cube showed 7 or 8 frames of delay: that’s about 1/3 second at 24 fps. This is not surprising, as the Cube has to compress its feed to h.264 to fit into a WiFi link, and the iDevice on the far end has to decode it. The surprise is not that the video is delayed; the surprise is that the delay is so minimal, all things considered.

Nonetheless, the Cube is almost universally rejected as a real-time operating link: operators and ACs refuse to rely on it due to its latency.
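To put the frame counts reported above into wall-clock terms, here is a minimal conversion sketch. It assumes the nominal rates 24000/1001 fps (23.976) and 30000/1001 fps (29.97); the helper name `frames_to_ms` is made up for this example, and the frame counts are the ones quoted in the text.

```python
from fractions import Fraction

# Nominal frame rates (assumed exact NTSC-style fractions).
RATES = {
    "23.976": Fraction(24000, 1001),
    "29.97": Fraction(30000, 1001),
}


def frames_to_ms(frames, fps):
    """Convert a latency in frames to milliseconds at the given rate."""
    return float(Fraction(frames) * 1000 / fps)


if __name__ == "__main__":
    # Latencies mentioned in the text: the fastest monitors (2), the
    # field-based ones (2.5), most monitors (3), the Zacuto (4-5),
    # and the Cube (7-8).
    for frames in (2, 2.5, 3, 4, 5, 7, 8):
        row = ", ".join(
            f"{frames_to_ms(frames, fps):6.1f} ms @ {name}"
            for name, fps in RATES.items()
        )
        print(f"{frames:>3} frames: {row}")
```

The same frame count costs more real time at the lower frame rate, so a delay that feels tolerable at 29.97 fps is a quarter longer at 23.976 fps.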

So, if we’re often operating with 2 – 3 frames of delay and not worrying, and 7 – 8 frames is widely felt to be too much, what’s the tipping point? I have found the 4 – 5 frame delay on the Zacuto to be noticeable, but not (usually) problematic. Art Adams notes:

“I operate off monitors all the time … and almost never have a problem. The F35 was notable for having severe viewfinder delay, but I almost never shot with that camera so I don’t know how hard it was to work around. I remember another camera having similar issues but I don’t remember which one or whether it was a real problem.

“There’s a limit beyond which the delay is really disturbing to an operator, and the F35 pushed that limit, but I don’t know what it was. If I had to guess I’d say six frames, as it was really noticeable.”

Here’s a quick demo: the Panasonic monitor hardwired, and the same monitor via the Bolt with the Cube’s feed to an iPhone in the same shot. Don’t bother comparing timecodes; for this demo, I used a somewhat more obvious indicator…

It’s a bit disconcerting how even the 2.5 frame delay on the Panasonic seems like an eternity next to the real-world action. Yet we live with this sort of thing all the time, and for the most part don’t even register it… but when it’s pointed out, we often think it’s horrible.

The Take-Away

The bottom line? Much of the time, monitor latency isn’t an issue; our subjects aren’t bouncing around quickly enough that the visual delay is apparent or problematic. But that’s not always the case: in shots with sudden and rapid changes in movement, like sports (think of following a golf ball when it’s struck, or staying in tight on a football player dodging through the opposing team’s defenders), even a 2 – 3 frame delay will cause the operator to lag the action.

The solution? Know that what you’re seeing isn’t a live view; rather it’s a time-delayed replay. If you know something is about to happen, be ready to anticipate it before you see it – many savvy operators keep one eye on the EVF or monitor and the other eye on the real-world action, cueing their moves off of reality, not off the monitor.

Disclosure: I’m currently working with Sound Devices (makers of the PIX240) on future products, and I have an Odyssey 7Q on loan for review, courtesy of Convergent Design. I own the GH3, 5D Mk II, Zacuto EVF, Teradek Cube, and the iDevices and apps; all other equipment was supplied by Videofax, who also gave me space to work and assistance in setup (thanks again, folks!). None of the companies mentioned offered me any compensation or other considerations for a mention in this article, nor in any way influenced the outcome of my tests.

About the Author

Adam Wilt is a software developer, engineering consultant, and freelance film & video tech. He’s had small jobs on big productions (PA, “Star Trek: The Motion Picture”, Dir. Robert Wise), big jobs on small productions (DP, “Maelstrom”, Dir. Rob Nilsson), and has worked camera, sound, vfx, and editing gigs on shorts, PSAs, docs, music vids, and indie features. He started his website on the DV format, www.adamwilt.com/DV.html, about the same time Chris Hurd created the XL1 Watchdog, and participated in DVInfo.net’s 2006 “Texas Shootout”. He has written for DV Magazine and ProVideoCoalition.com, taught courses at DV Expo, and given presentations at NAB, IBC, and Cine Gear Expo. When he’s not doing contract engineering or working on apps like Cine Meter, he’s probably exploring new cameras, just because cameras are fun.
